Advising Reasonable AI Criticism

We're the good guys. They're the bad guys.

A loose analysis of the unproductive criticism surrounding artificial intelligence from both the pro and anti camps, advocating for more nuanced, constructive engagement and outlining how it can be achieved to allow more informed and respectful discussion of AI technology and its impact.

https://vale.rocks/posts/ai-criticism

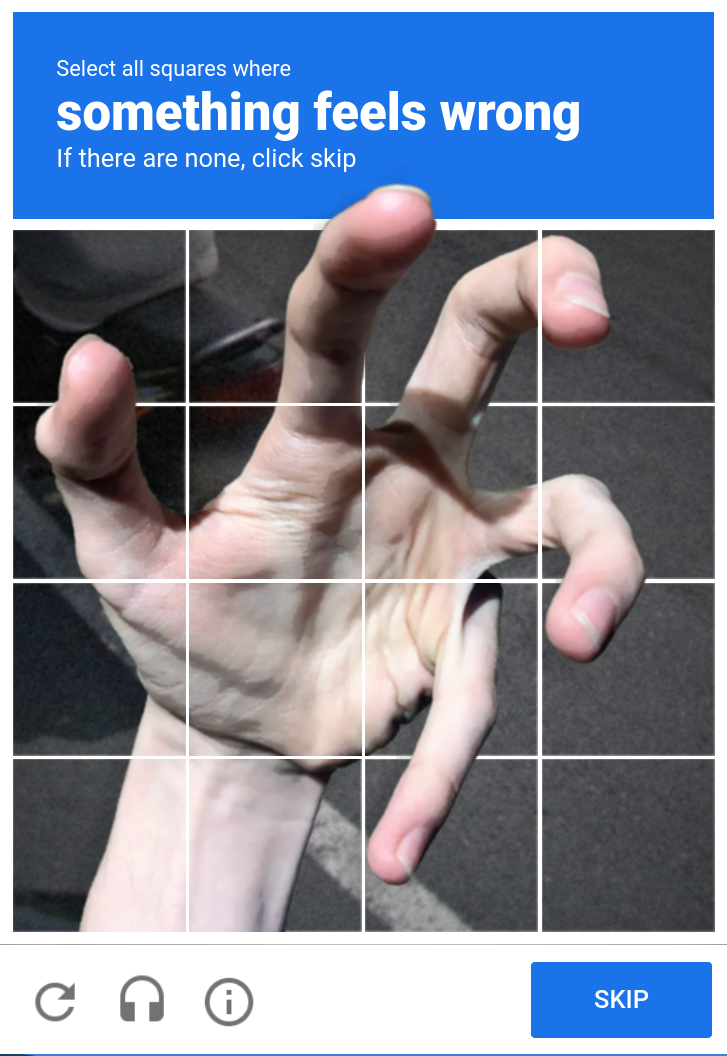

The Size of My xG3 v2 Magnet Implant

A showcase of the size of the xG3 v2 bio-magnet implanted in my hand, with the help of a to-scale 3D-printed analogue.